Our infrastructure must be highly scalable because traffic fluctuates throughout the day and the year, especially during peak periods like Christmas, when it can rise by more than 50%. With Google Cloud's native autoscaler, we ended up with overprovisioned nodes that left over 40% headroom: resources our team never used but still paid for. This prompted us to look for an autoscaling solution that could dynamically adjust the resources provisioned for our clusters and workloads. That is how we found Cast AI.

The results at a glance

We use Cast AI's autoscaling at both the node and workload levels to scale cost-efficiently. Its automated scaling and bin-packing mechanisms provision just enough resources to keep performance high without driving up the cloud bill.

Automating at both levels has allowed us to achieve:

🌟 30% cloud cost savings across the Dev and Pre-prod clusters.

🌟 Cost-efficient node autoscaling with minimum headroom.

🌟 Full control over automation mechanisms.

Bin packing Spot VMs in the Dev and Pre-prod clusters for 30% savings

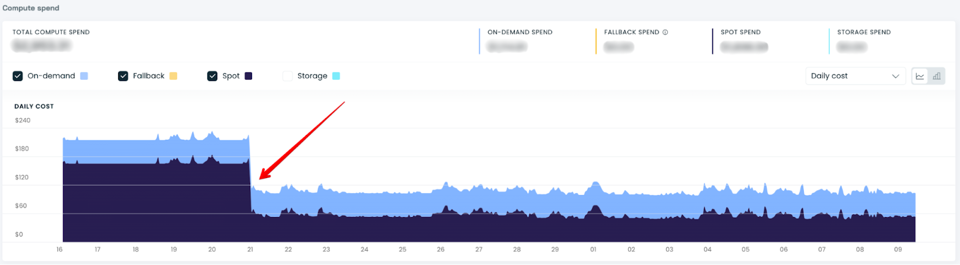

Since implementing Cast AI, Heureka has seen a 30% drop in compute costs in the Dev cluster. This came from bin packing Spot-friendly workloads (stateless workloads that tolerate interruptions) onto fewer nodes and promptly removing the Spot nodes left empty, which drove down the number of provisioned CPUs.
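The bin-packing idea can be illustrated with a minimal first-fit-decreasing sketch that packs pod CPU requests onto identically sized nodes. This is not Cast AI's actual algorithm. The pod names, CPU requests, 4-vCPU node size, the "four nodes before" starting point, and the `Node` and `bin_pack` helpers are all hypothetical. The sketch also ignores memory, affinity rules, and disruption budgets.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    # Hypothetical node model: only CPU is tracked, memory is ignored.
    name: str
    cpu_capacity: float
    pods: list = field(default_factory=list)

    @property
    def cpu_used(self) -> float:
        return sum(cpu for _, cpu in self.pods)

    def fits(self, cpu: float) -> bool:
        return self.cpu_used + cpu <= self.cpu_capacity


def bin_pack(pods: dict, node_cpu: float) -> list:
    """Place pods on as few nodes as possible using first-fit decreasing."""
    nodes = []
    # Largest requests first; smaller pods then fill the remaining gaps.
    for pod, cpu in sorted(pods.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_cpu:
            raise ValueError(f"{pod} requests more CPU than one node provides")
        # First fit: reuse the first node that still has enough free CPU.
        target = next((n for n in nodes if n.fits(cpu)), None)
        if target is None:
            # No existing node has room, so provision a new one.
            target = Node(name=f"spot-node-{len(nodes) + 1}", cpu_capacity=node_cpu)
            nodes.append(target)
        target.pods.append((pod, cpu))
    return nodes


# Hypothetical Spot-friendly pods and their CPU requests (vCPUs).
pods = {
    "api-1": 1.5, "api-2": 1.5,
    "worker-1": 2.0, "worker-2": 2.0,
    "web-1": 1.0, "web-2": 1.0,
    "cron-1": 0.5, "cron-2": 0.5,
}
nodes_before = 4  # hypothetical: pods currently spread over four 4-vCPU nodes
nodes = bin_pack(pods, node_cpu=4.0)
for n in nodes:
    print(n.name, n.cpu_used, [p for p, _ in n.pods])
print("removable empty nodes:", nodes_before - len(nodes))
```

In this toy run the 10 vCPUs of requests fit on three nodes instead of four. The freed node can then be removed, cutting provisioned vCPUs from 16 to 12.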

The Cast AI autoscaler unlocked further savings by moving Spot-friendly workloads already running on Spot VMs to cheaper instance families while maintaining service uptime and application performance.
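The instance-family swap can be pictured as choosing the cheapest instance type that still meets a workload's resource needs. The sketch below is a hypothetical illustration, not how Cast AI necessarily ranks options. The catalog, family names, and Spot prices are made up, and real prices vary by region and over time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int
    spot_price_per_hour: float  # hypothetical USD/hour, for illustration only


def cheapest_fit(catalog, cpu_needed: float, mem_needed: float) -> Optional[InstanceType]:
    """Return the cheapest instance type that satisfies the CPU and memory needs."""
    candidates = [
        t for t in catalog
        if t.vcpus >= cpu_needed and t.memory_gib >= mem_needed
    ]
    return min(candidates, key=lambda t: t.spot_price_per_hour, default=None)


def cheaper_replacement(current, catalog, cpu_needed, mem_needed):
    """Suggest a move only if a fitting instance type is strictly cheaper."""
    best = cheapest_fit(catalog, cpu_needed, mem_needed)
    if best is not None and best.spot_price_per_hour < current.spot_price_per_hour:
        return best
    return None


# Hypothetical catalog: family names and prices are made up.
catalog = [
    InstanceType("family-a-4", 4, 16, 0.060),
    InstanceType("family-b-4", 4, 16, 0.045),
    InstanceType("family-c-8", 8, 32, 0.080),
    InstanceType("family-d-2", 2, 8, 0.020),
]
current = catalog[0]
suggestion = cheaper_replacement(current, catalog, cpu_needed=3.5, mem_needed=12)
print(f"move {current.name} -> {suggestion.name}" if suggestion else "no cheaper option")
```

A real migration also has to drain and reschedule pods safely to preserve uptime, which this sketch leaves out.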